Tech-Notes

Notes blog on Software, Engineering and Technology

Project maintained by matiaspakua Hosted on GitHub Pages — Theme by mattgraham

Modern Software Engineering: Doing What Works to Build Better Software Faster

Table of Contents

- 1. Introduction

- 2. What is Engineering?

- 3. Fundamentals of an Engineering Approach

- 4. Working Iteratively

- 5. Feedback

- 6. Incrementalism

- 7. Empiricism

- 8. Being Experimental

- 9. Modularity

- 10. Cohesion

- 11. Separation of concerns

- 12. Information Hiding and Abstraction

- 13. Managing coupling

- 14. The tools of an engineering discipline

- 15. The modern software Engineer

- References

1. Introduction

- Engineering: the practical application of science. Software engineers need to become experts at learning.

- What is software Engineering? Software engineering is the application of an empirical, scientific approach to finding efficient, economic solutions to practical problems. We must become experts at learning and experts at managing complexity.

- To become experts at “learning”, we must apply:

- Iteration

- Feedback

- Incrementalism

- Experimentation

- Empiricism

- To become experts at “managing complexity”, we need the following:

- Modularity

- Cohesion

- Separation of concerns

- Abstraction

- Loose coupling

- The following are practical tools to drive an effective strategy:

- Testability

- Deployability

- Speed

- Controlling variables

- Continuous delivery

2. What is Engineering?

- The first software engineer: Margaret Hamilton. Her approach focused on how things fail, the ways in which we get things wrong: "failing safely". The assumption is that we can never code for every scenario, so how do we code in ways that allow our systems to cope with the unexpected and still make progress?

- Managing complexity: Edsger Dijkstra said: “The art of programming is the art of organizing complexity.”

- Repeatability and accuracy of measurement: an engineering-focused team will use accurate measurement rather than waiting for something bad to happen.

- Engineering, creativity and craft: taking an engineering approach to solving problems does not, in any way, reduce the importance of skill, creativity and innovation.

- Design engineering is a deeply exploratory approach to gaining knowledge.

- Trade-offs: one of the key trade-offs that is vital to consider in the production of software is “coupling”.

3. Fundamentals of an Engineering Approach

- The importance of measurement: the most important concepts are stability and throughput. Stability is tracked by:

- Change Failure Rate

- Recovery Failure time

- Throughput is tracked by:

- Lead time: the time needed to go from idea to working software.

- Frequency: how often we go to production.

- Throughput is a measure of a team’s efficiency at delivering ideas, in the form of working software.

- It is important to track and improve these measures so that decisions are based on evidence.

- Foundations: so, we need to become experts in learning and managing complexity.

- Expert at learning:

- Working iteratively

- Employing fast, high-quality feedback

- Working incrementally

- Being experimental

- Being empirical

- Expert at managing complexity:

- Modularity

- Cohesion

- Separation of concerns

- Information hiding/abstraction

- Coupling
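
As a rough illustration of the stability and throughput measures listed above, the sketch below computes change failure rate, mean recovery time, mean lead time and deployment frequency from a list of deployment records. The `Deployment` and `DeliveryMetrics` classes and the sample figures are hypothetical, invented for this example; they do not come from the book.

```java
import java.time.Duration;
import java.util.Arrays;
import java.util.List;

// Hypothetical record of one production deployment (illustrative names only).
final class Deployment {
    final Duration leadTime;      // time from idea/commit to running in production
    final boolean failed;         // true when the change caused a failure
    final Duration recoveryTime;  // time taken to recover; ZERO when nothing failed

    Deployment(Duration leadTime, boolean failed, Duration recoveryTime) {
        this.leadTime = leadTime;
        this.failed = failed;
        this.recoveryTime = recoveryTime;
    }
}

final class DeliveryMetrics {
    // Stability: fraction of deployments that caused a failure.
    static double changeFailureRate(List<Deployment> deployments) {
        if (deployments.isEmpty()) return 0.0;
        long failures = deployments.stream().filter(d -> d.failed).count();
        return (double) failures / deployments.size();
    }

    // Stability: average recovery time, taken over failed deployments only.
    static Duration meanRecoveryTime(List<Deployment> deployments) {
        long failures = deployments.stream().filter(d -> d.failed).count();
        if (failures == 0) return Duration.ZERO;
        long totalMinutes = deployments.stream()
                .filter(d -> d.failed)
                .mapToLong(d -> d.recoveryTime.toMinutes())
                .sum();
        return Duration.ofMinutes(totalMinutes / failures);
    }

    // Throughput: average lead time across all deployments.
    static Duration meanLeadTime(List<Deployment> deployments) {
        if (deployments.isEmpty()) return Duration.ZERO;
        long totalMinutes = deployments.stream()
                .mapToLong(d -> d.leadTime.toMinutes())
                .sum();
        return Duration.ofMinutes(totalMinutes / deployments.size());
    }

    // Throughput: how often we go to production, per observed day.
    static double deploymentsPerDay(List<Deployment> deployments, int daysObserved) {
        return (double) deployments.size() / daysObserved;
    }
}

class DeliveryMetricsDemo {
    // Made-up sample: four deployments, one of which failed.
    static List<Deployment> sample() {
        return Arrays.asList(
                new Deployment(Duration.ofHours(2), false, Duration.ZERO),
                new Deployment(Duration.ofHours(4), true, Duration.ofMinutes(30)),
                new Deployment(Duration.ofHours(6), false, Duration.ZERO),
                new Deployment(Duration.ofHours(8), false, Duration.ZERO));
    }

    public static void main(String[] args) {
        List<Deployment> deployments = sample();
        System.out.println("Change failure rate: " + DeliveryMetrics.changeFailureRate(deployments));
        System.out.println("Mean recovery time:  " + DeliveryMetrics.meanRecoveryTime(deployments));
        System.out.println("Mean lead time:      " + DeliveryMetrics.meanLeadTime(deployments));
        System.out.println("Deployments per day: " + DeliveryMetrics.deploymentsPerDay(deployments, 2));
    }
}
```

The point of the sketch is only that these four numbers are cheap to compute once deployments are recorded, which is what makes evidence-based decisions practical.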

Optimize for Learning

4. Working Iteratively

- Iteration allows us to learn, react and adapt. It’s at the heart of all exploratory learning and is fundamental to any real knowledge acquisition.

- Working iteratively encourages us to think in smaller batches and to take modularity and separation of concerns seriously.

- Iterate, learn, adapt to new tech: as Kent Beck says in his book: “Embrace change!”

- REFERENCE: my notes on Extreme Programming: XP

5. Feedback

- Importance: without feedback, there is no opportunity to learn. Feedback allows us to establish a source of evidence for our decisions.

- Feedback in coding: with UNIT TESTS!

- Feedback in integration: Continuous Integration (CI) is about evaluating every change to the system along with every other change to the system as frequently as possible, as close to “continuously” as we can get.

- Feedback in design: TDD, as a practice, is what gives us the most feedback on design. If the tests are hard to write, that tells us something important about the quality of the code and the design.

- Feedback in product Design: adding telemetry to our systems that allows us to gather data about which features of our systems are used, and how they are used, is now the norm.

- Modern times: we are moving from “Business and IT” to “Digital Business”. Telemetry can provide insights into customers’ wants, needs and behaviour that even the customers themselves are not conscious of.

- Feedback in organization and culture: these concepts of feedback are generally not applied to the organization and its culture. When people do apply this kind of approach, they get much better results: “Lean Thinking” (the Toyota Way) is an example of this.

6. Incrementalism

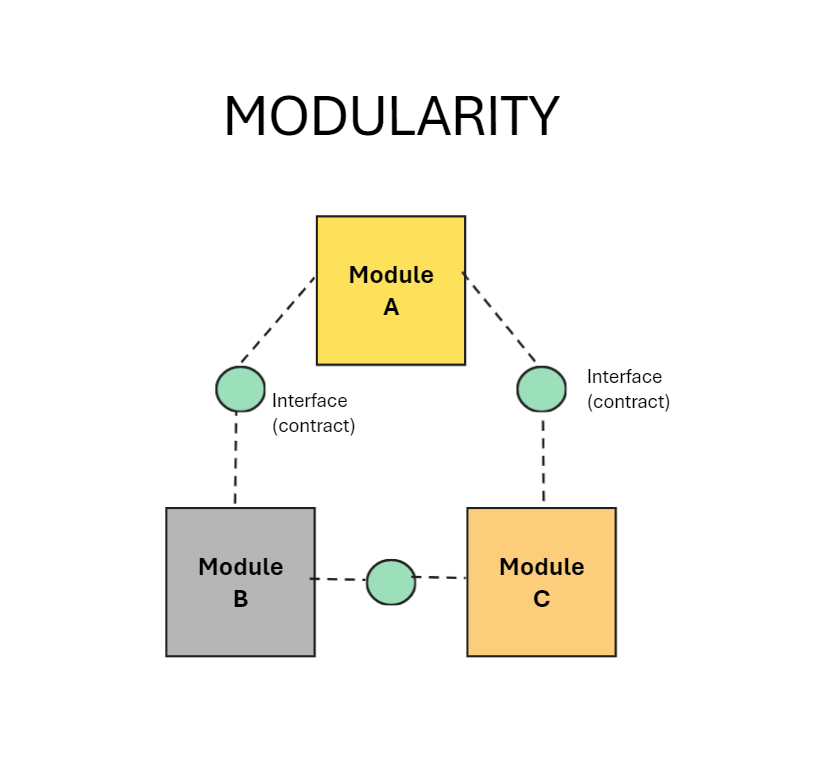

- Definition: incremental design is directly related to any modular design application, in which components can be freely substituted if improved to ensure better performance.

- Importance of Modularity: divide the problem into pieces aimed at solving a single part of the problem.

- Organizational incrementalism: one of the huge benefits that modularity brings is “isolation”. Modular organizations are more flexible, more scalable and more efficient.

- Tools: feedback, experimentation, refactoring, version control, testing. A test-driven approach to automated testing demands that we create mini executable specifications for the changes that we make to our systems. We do this by keeping the tests as simple as we can and by designing our system as testable code.

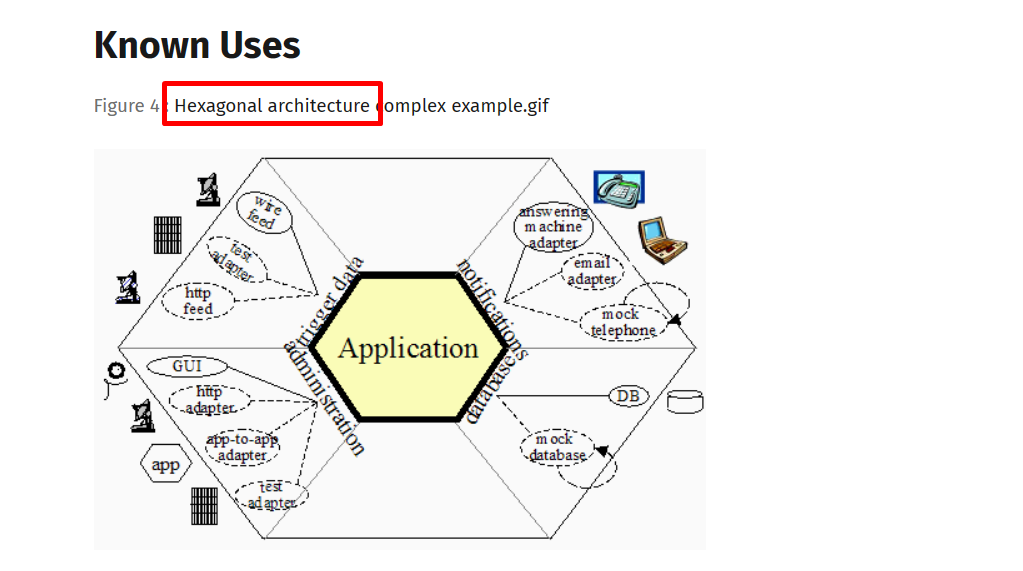

- Limit the impact of change: apply patterns such as Ports & Adapters. At any interface point between two components of the system that we want to decouple (a port), we define a separate piece of code to translate inputs and outputs (the adapter).

- REFERENCE: Hexagonal Architecture: Hexagonal architecture – Alistair Cockburn

- Speed of feedback: the faster, the better.

- Incremental design: the agile concept is based on the premise that we can begin work before we have all the answers. Accepting that we don’t know, doubting what we do know, and working to learn fast is a step from dogma toward engineering.

- Avoid over-engineering: never add code for things that I don’t know are needed now. The important concept is that code needs to be simple and small, which allows me to change it when I learn new things.
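
The Ports & Adapters idea from the "Limit the impact of change" item can be sketched as follows. This is a minimal illustration under assumptions: `PaymentPort`, `LegacyGateway`, `LegacyGatewayAdapter` and `CheckoutService` are hypothetical names, and `LegacyGateway` is a stand-in for whatever external component sits on the other side of the boundary.

```java
// Port: the interface our core code owns, expressed in our own terms.
interface PaymentPort {
    boolean charge(String customerId, long amountInCents);
}

// Stand-in for an external component with its own conventions (hypothetical).
final class LegacyGateway {
    // Takes an amount in dollars; returns 0 on success, non-zero on failure.
    int submit(String account, double dollars) {
        return dollars > 0 ? 0 : 1;
    }
}

// Adapter: translates inputs and outputs between the port and the gateway.
final class LegacyGatewayAdapter implements PaymentPort {
    private final LegacyGateway gateway;

    LegacyGatewayAdapter(LegacyGateway gateway) {
        this.gateway = gateway;
    }

    @Override
    public boolean charge(String customerId, long amountInCents) {
        double dollars = amountInCents / 100.0;          // cents -> dollars
        return gateway.submit(customerId, dollars) == 0; // status code -> boolean
    }
}

// Core logic: depends only on the port, never on the gateway.
final class CheckoutService {
    private final PaymentPort payments;

    CheckoutService(PaymentPort payments) {
        this.payments = payments;
    }

    String checkout(String customerId, long amountInCents) {
        return payments.charge(customerId, amountInCents) ? "PAID" : "PAYMENT_FAILED";
    }
}

class PortsAndAdaptersDemo {
    public static void main(String[] args) {
        CheckoutService service =
                new CheckoutService(new LegacyGatewayAdapter(new LegacyGateway()));
        System.out.println(service.checkout("customer-1", 1250));
    }
}
```

If the gateway changes, or is replaced, only the adapter needs to change; `CheckoutService` can also be tested with a one-line fake such as `(customer, amount) -> true`.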

7. Empiricism

- Definition: Empiricism, in the philosophy of science, is defined as “emphasizing evidence, especially as discovered in experiments”.

- I know that bug!: Science works! Make a hypothesis. Figure out how to prove or disprove it. Carry out the experiment. Observe the results and see if they match your hypothesis. Repeat.

- Avoid Self-deception: “The first principle is that you must not fool yourself and you are the easiest person to fool.”

- Guided by reality: the best way to start is to assume that what you know, and what you think, is probably wrong, and then figure out how you could find out how it is wrong.

8. Being Experimental

- Definition: Richard Feynman says “Science is the belief in the ignorance of experts” and “Have no respect whatsoever for authority; forget who said it and instead look at what he starts with, where he ends up, and ask yourself, ‘Is it reasonable?’”.

- Hypothesis, Measurement and Controlling the Variables. To gather feedback and make useful measurements, we need to control the variables.

- Experiments: we can run literally millions of experiments every second if we want, using unit tests. What I am thinking of is organizing our development around a series of iterative experiments that make tiny predictions.

- Creating new knowledge: we can create a new experiment, a test, that defines the new knowledge that we expect to observe, and then we can add knowledge in the form of working code that meets that need.
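
One way to read the points above is that each test is a tiny experiment: a hypothesis, a prediction, and controlled variables. The sketch below uses plain Java rather than a test framework, and the `Discount` and `DiscountExperiment` names are hypothetical, chosen only for illustration.

```java
// Production code, written only after the experiment below existed.
final class Discount {
    // Applies a whole-number percentage discount to a price in cents.
    static long apply(long priceInCents, int percent) {
        return priceInCents - (priceInCents * percent) / 100;
    }
}

final class DiscountExperiment {
    // Hypothesis: a 10% discount on 200.00 yields 180.00.
    // Controlled variables: fixed inputs, no I/O, no clock, no shared state.
    static boolean tenPercentOffTwoHundred() {
        long predicted = 18_000;                      // the tiny prediction
        long observed = Discount.apply(20_000, 10);   // the experiment
        return predicted == observed;                 // compare with reality
    }

    public static void main(String[] args) {
        System.out.println(tenPercentOffTwoHundred()
                ? "hypothesis held"
                : "hypothesis falsified");
    }
}
```

Because nothing outside the method can vary, a failing result points at the code under test rather than at the environment.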

Optimize for Managing Complexity

9. Modularity

- Good design: the cornerstones are modularity and separation of concerns. How can we create code and systems that will grow and evolve over time but that are appropriately compartmentalized to limit damage if we make a mistake?

- The importance of testability: if our tests are difficult to write, it means that our design is poor.

- Improve modularity: we need to be clear about what it is that we are measuring, and we need to be clear about the value of our measurements. We need to identify the “points of measurement” in the system. The key is to understand the scope of measurement that makes sense, and to work to make those measurements easy to achieve and stable in terms of the results that they generate.

- Services and modularity: one of the most important aspects is “information hiding”; this is one of the essences of design. The concept of a “service” in software terms is that it represents a boundary.

- Deployability and modularity: the way to accomplish independence between modules is to take the modularity of the system so seriously that each module is, in terms of build, test and deployment, independent from every other module.

- Modularity at different scales: testing, when done well, exposes something important and true about the nature of our code, the nature of our design, and the nature of the problem that we are solving.

- Modularity in human systems: in a truly decoupled system, we can parallelize all we want. Microservices are an organizational scalability play; they don’t really have any other advantage, but let’s be clear, this is a big advantage if scalability is your problem. If we need small teams to efficiently create good, high-quality work, then we need to “decouple”. We need modular organizations as well as modular software.

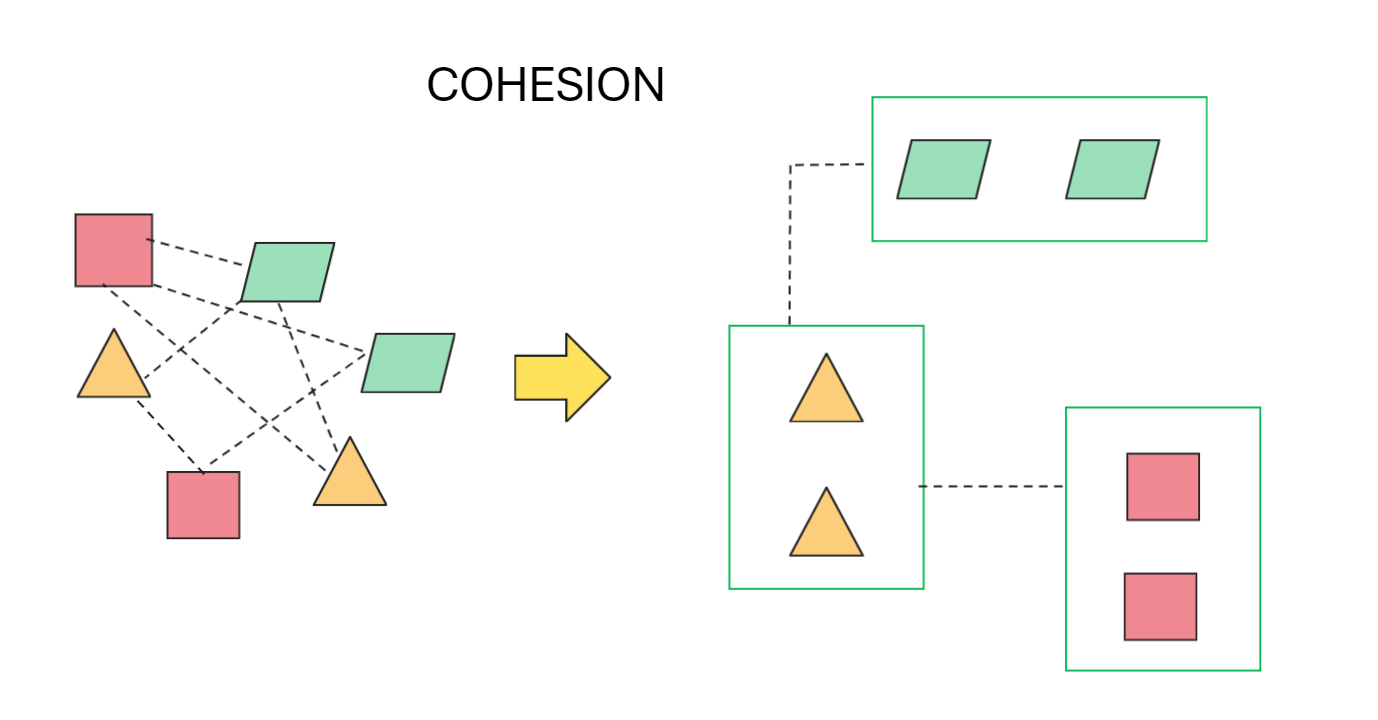

10. Cohesion

- Modularity and cohesion: good design in software is really about the way in which we organize the code in the system that we create.

- **The primary goal of code is to communicate ideas to humans**.

- Context matters: one effective tool to drive this kind of decision making is domain driven design (DDD).

- High-Performance Software: to achieve this, we need to do the maximum amount of work for the smallest number of instructions.

- Link to coupling: given two lines of code, A and B, they are coupled when B must change behavior only because A changed. They are cohesive when a change to A allows B to change so that both add new value.

- Cost of poor cohesion: there is a simple, subjective way to spot poor cohesion. If you have ever read a piece of code and thought “I don’t know what this code does,” it is probably because the cohesion is poor.

- Cohesion in human system: one of the leading predictors of high performance measured in terms of throughput and stability, is the ability of teams to make their own decisions without the need to ask permission of anyone outside the team.

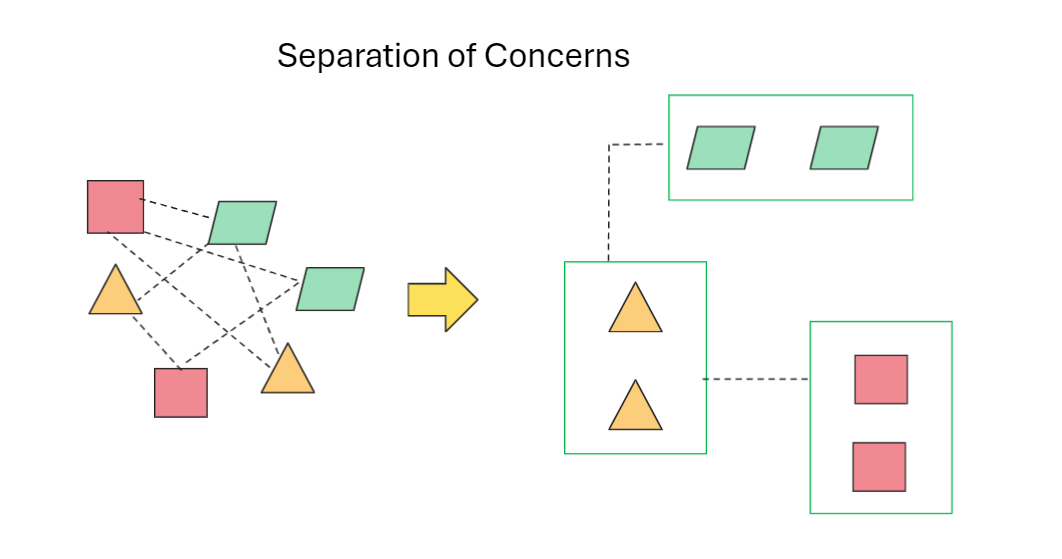

11. Separation of concerns

- Definition: separation of concerns is defined as “a design principle for separating a computer program into distinct sections such that each section addresses a separate concern”. Stuff that is unrelated is far apart, and stuff that is related is close together.

- Dependency injection: the dependencies of a piece of code are supplied to it as parameters, rather than created by it.

- Essential and accidental complexity: the essential complexity of a system is the complexity that is inherent in solving the problem that you are trying to solve. The accidental complexity is everything else: the problems that we are forced to solve as a side effect of doing something useful with a computer.

- Testability: if we work to ensure that our code is easy to test, then we must separate the concerns, or our tests will lack focus.

- Ports and adapters: the best fit when translating information that crosses between bounded contexts.

- What is an API? An Application Programming Interface (API) is all the information that is exposed to consumers of a service or library that exposes that API. In combination with ports and adapters, it creates a consistent level of abstraction. The rule is: always add ports and adapters where the code that you talk to is in a different scope of evaluation.
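
To make the dependency injection item concrete, here is a minimal sketch in which a dependency is supplied through the constructor instead of being created inside the class. `MessageSender`, `WelcomeService` and `RecordingSender` are hypothetical names used only for this example.

```java
import java.util.ArrayList;
import java.util.List;

// The dependency, described by what the caller needs from it.
interface MessageSender {
    void send(String to, String body);
}

// The concern of "what to say" is separated from "how it is delivered".
final class WelcomeService {
    private final MessageSender sender; // supplied from outside, not created here

    WelcomeService(MessageSender sender) {
        this.sender = sender;
    }

    void welcome(String user) {
        sender.send(user, "Welcome, " + user + "!");
    }
}

// Test double: records messages instead of delivering them.
final class RecordingSender implements MessageSender {
    final List<String> sent = new ArrayList<>();

    @Override
    public void send(String to, String body) {
        sent.add(to + ": " + body);
    }
}

class DependencyInjectionDemo {
    public static void main(String[] args) {
        RecordingSender recorder = new RecordingSender();
        new WelcomeService(recorder).welcome("ana");
        System.out.println(recorder.sent);
    }
}
```

Because `WelcomeService` never constructs its sender, a test can focus on the welcome text alone, and a production wiring could pass an email or SMS implementation without touching the class.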

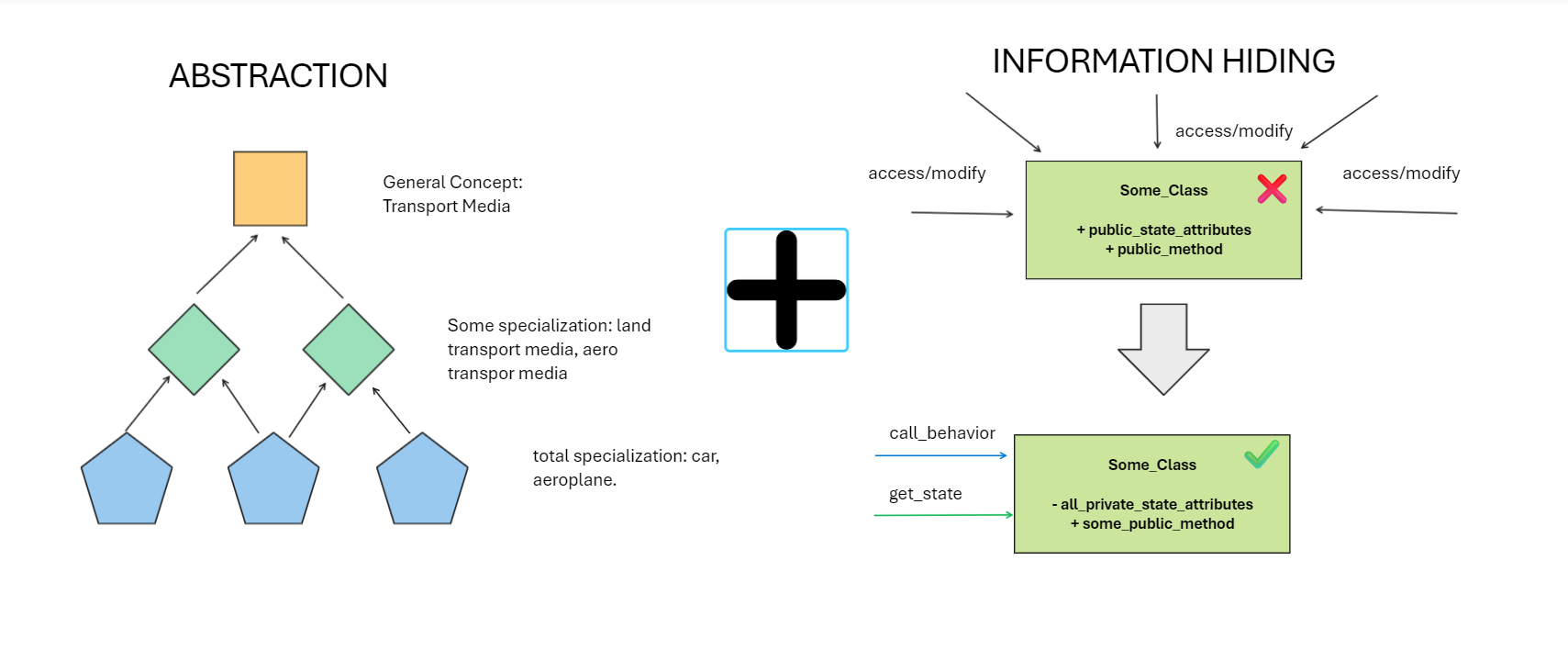

12. Information Hiding and Abstraction.

- Information hiding: it is based on hiding the behavior of the code. It includes implementation details as well as any data that it may or may not use.

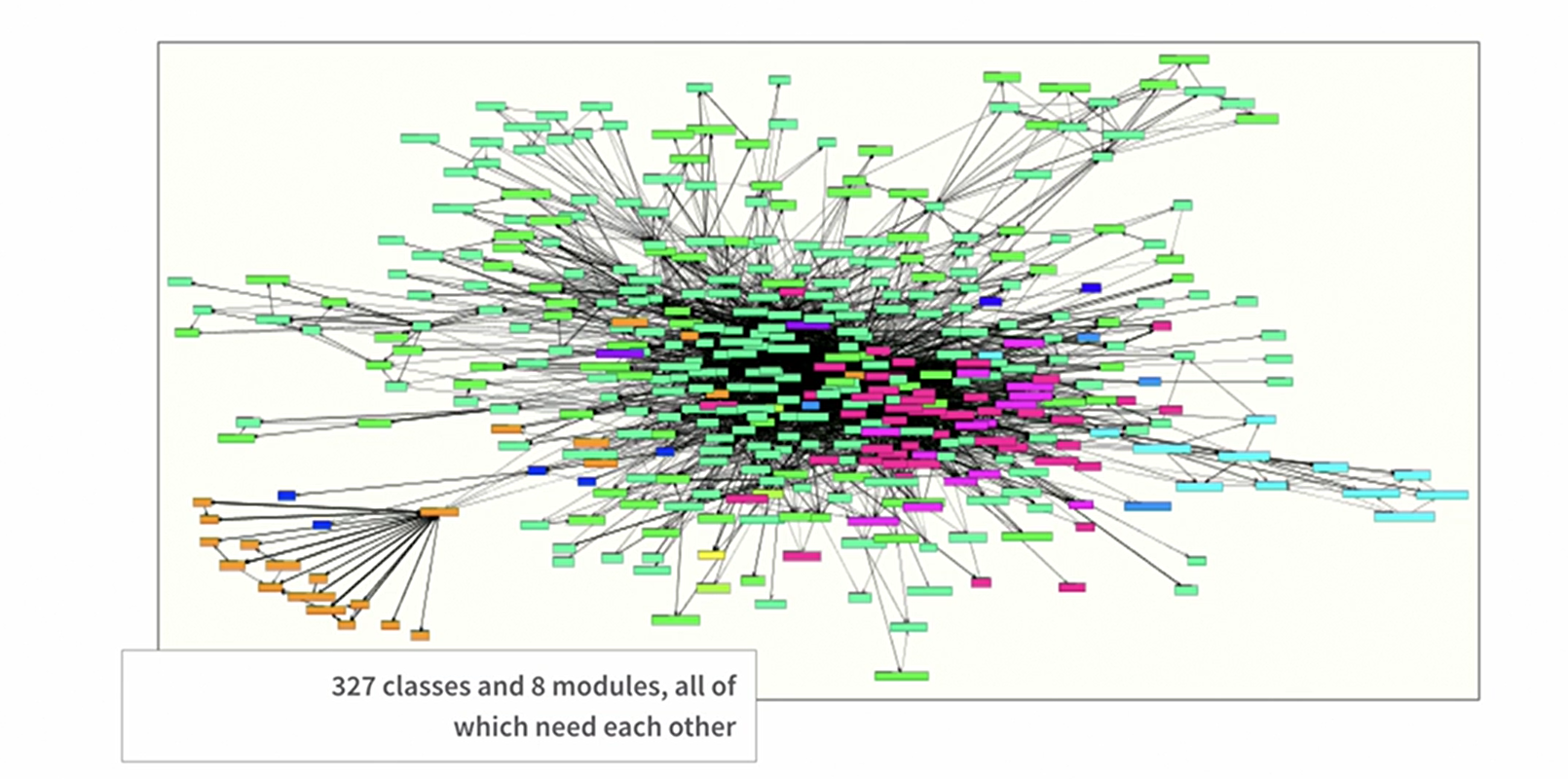

- The Big Ball of Mud problem: it has organizational and cultural causes as well as technical ones.

- Organizational and cultural problems: we need to own the responsibility for the quality of the code that we work on. It is our duty of care to do a good job. Our aim should be to do whatever it takes to build better software faster, which means improving QUALITY. The foundations of a professional approach are: refactor, test, take time to create great designs, fix bugs, collaborate, communicate and learn.

- Technical and design problems, and the fear of over-engineering: we should design our solution (code) so that we can return to it at any point in the future, when we have learned something new, and change it. The main problem to fix here is the FRAGILITY OF OUR CODE. There are three approaches to this: 1) the HERO-programmer model (a super programmer exists who can do and fix everything); 2) abstraction; and 3) testing. Abstraction hides the complexity, and testing validates the solution.

- Improve abstraction through testing: apply testability to design, that is, write the specification (test) as an act of design. This is a practical, pragmatic, lightweight approach to design by CONTRACT.

- Abstractions from the problem domain: "thinking like an engineer" means thinking about the ways in which things can go wrong.

- Isolate third-party systems and code: always insulate your code from third-party code with your own abstractions. Think carefully about what you allow “inside” your code. For example, inside our code allow only language concepts that are native, nothing from outside.

- Always prefer to hide information. Period.
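
The advice on isolating third-party code could look roughly like the sketch below. `VendorCache` is a made-up stand-in for a third-party library (it is not a real API), and `TextStore` and `VendorTextStore` are hypothetical names for our own abstraction and its single implementation.

```java
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Stand-in for a third-party library (hypothetical; not a real library).
final class VendorCache {
    static final class VendorEntry {
        final byte[] payload;

        VendorEntry(byte[] payload) {
            this.payload = payload;
        }
    }

    private final Map<String, VendorEntry> entries = new HashMap<>();

    void store(String key, VendorEntry entry) {
        entries.put(key, entry);
    }

    VendorEntry fetch(String key) {
        return entries.get(key); // null when the key is absent
    }
}

// Our own abstraction: only native language types cross this boundary.
interface TextStore {
    void put(String key, String value);

    Optional<String> get(String key);
}

// The only class in our code that knows VendorCache exists.
final class VendorTextStore implements TextStore {
    private final VendorCache cache = new VendorCache();

    @Override
    public void put(String key, String value) {
        byte[] bytes = value.getBytes(StandardCharsets.UTF_8);
        cache.store(key, new VendorCache.VendorEntry(bytes));
    }

    @Override
    public Optional<String> get(String key) {
        VendorCache.VendorEntry entry = cache.fetch(key);
        return entry == null
                ? Optional.empty()
                : Optional.of(new String(entry.payload, StandardCharsets.UTF_8));
    }
}

class InformationHidingDemo {
    public static void main(String[] args) {
        TextStore store = new VendorTextStore();
        store.put("greeting", "hello");
        System.out.println(store.get("greeting").orElse("<missing>"));
    }
}
```

Callers see only `String` and `Optional<String>`; the vendor's entry type, its byte encoding and its null convention stay hidden behind `TextStore`, so swapping the vendor affects one class.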

13. Managing coupling

- Definition: coupling is defined as “the degree of interdependence between software modules; a measure of how closely connected two routines or modules are; the strength of the relationships between modules”.

- Cost of coupling: The real reason why attributes of our systems like MODULARITY, and COHESION and techniques like ABSTRACTION and SEPARATION OF CONCERNS matter is because they help us reduce the “coupling” in our systems. So, in general, we should aim to prefer looser coupling over tighter coupling.

- Scaling up: there is a fairly serious limit on the size of a software development team, beyond which adding more people only slows it down. To reduce this problem, the team needs coordination that is as efficient as possible, and the best way to achieve this is continuous integration. See also Conway’s law: “any organization that designs a system will produce a design whose structure is a copy of the organization’s communication pattern.”

- Microservices: the general concept is to reduce the level of coupling. To do this, they need to be:

- Small

- Focused on one task

- Aligned with a bounded context.

- Autonomous

- Independently deployable ==> This is the KEY defining characteristic of a microservice.

- Loosely coupled.

- It is also important to mention that microservices are an organizational scaling pattern.

- Decoupling may mean more code. With this on the table: we should optimize for thinking, not for typing!

- Loose coupling isn’t the only kind that matters: The Nygard model of coupling:

| Type | Effect |
|---|---|
| Operational | A consumer can’t run without a provider |
| Developmental | Changes in producers and consumers must be coordinated |
| Semantic | Change together because of shared concepts |
| Functional | Change together because of shared responsibility |
| Incidental | Change together for no reason (breaking API contract) |

- Prefer loose coupling: to achieve high performance, the code needs to be simple, that is, efficient code that can be easily and, even better, predictably understood by our compilers and hardware.

- DRY is too simplistic: DRY is short for “Don’t Repeat Yourself”. It is a shorthand description of our desire to have a single canonical representation of each piece of behavior in our system.

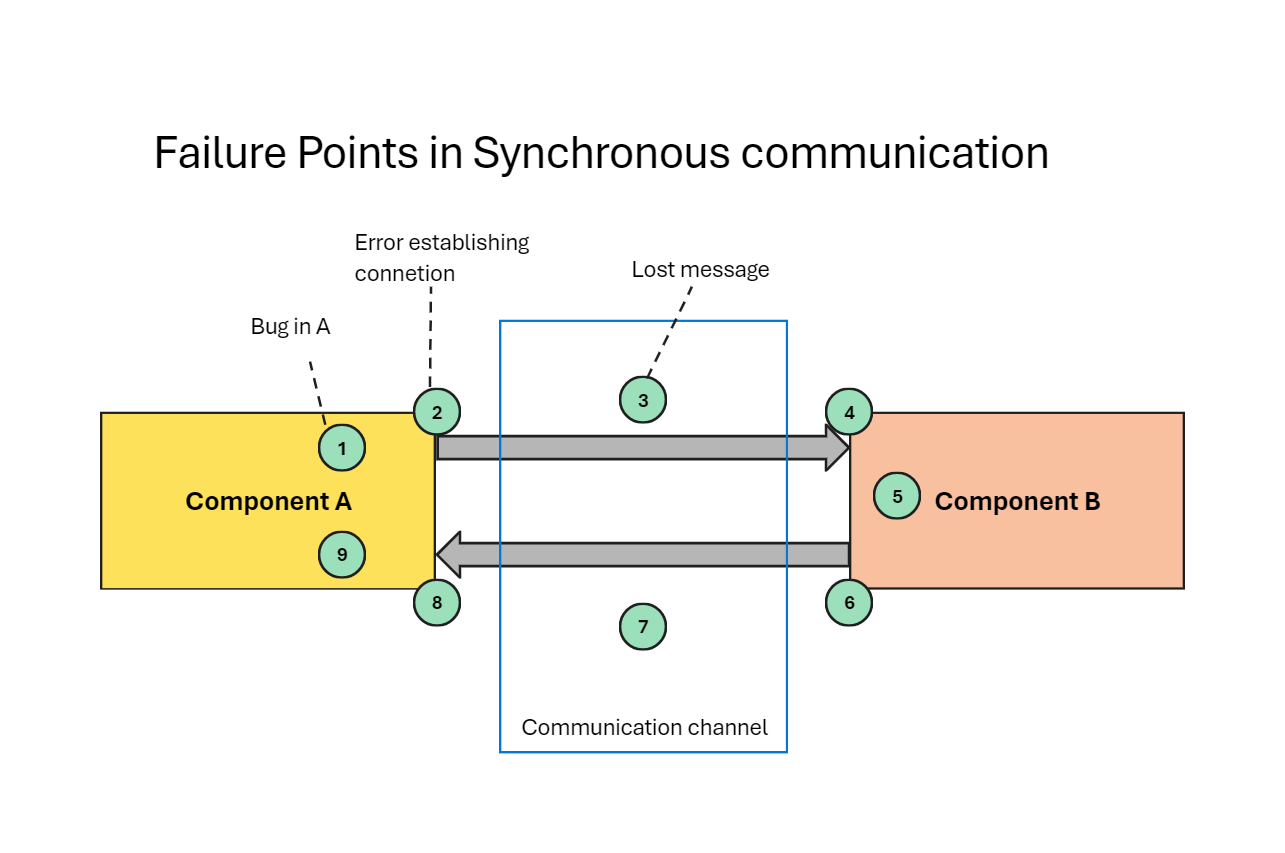

- ASYNC as a tool for loose coupling: we prefer async where possible, because of the large number of failure points in a SYNC pattern.

- Loose coupling in human systems: CI is built on the idea of optimizing the feedback loops in development to the extent that we have, in essence, continuous feedback on the quality of our work.

- Summary: high-quality code can be achieved in three ways:

- Design more decoupled systems.

- Work with interfaces (contracts)

- Get feedback quickly to identify problems early.
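
The preference for asynchronous communication mentioned above can be illustrated with a deliberately simplified sketch. It assumes an in-memory, single-threaded queue standing in for a real message broker, and `OrderPublisher` and `ShippingConsumer` are hypothetical names; the point is only that the sender hands a message over and does not wait for, or depend on, the receiver being available.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;

// Sender: hands the message over and returns immediately.
final class OrderPublisher {
    private final Queue<String> outbox;

    OrderPublisher(Queue<String> outbox) {
        this.outbox = outbox;
    }

    void orderPlaced(String orderId) {
        outbox.add("order-placed:" + orderId); // no call into the consumer
    }
}

// Receiver: processes messages whenever it is ready.
final class ShippingConsumer {
    private static final String PREFIX = "order-placed:";
    final List<String> shipped = new ArrayList<>();

    void drain(Queue<String> inbox) {
        String message;
        while ((message = inbox.poll()) != null) {
            shipped.add(message.substring(PREFIX.length()));
        }
    }
}

class AsyncMessagingDemo {
    public static void main(String[] args) {
        // Assumption: a plain in-memory queue plays the role of the broker.
        Queue<String> queue = new ArrayDeque<>();
        OrderPublisher publisher = new OrderPublisher(queue);
        ShippingConsumer consumer = new ShippingConsumer();

        publisher.orderPlaced("42"); // succeeds even if shipping is "down"
        publisher.orderPlaced("43");

        consumer.drain(queue);       // shipping catches up later
        System.out.println(consumer.shipped);
    }
}
```

In a synchronous version, `orderPlaced` would call shipping directly and fail whenever shipping failed; here the only shared knowledge is the message format.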

Tools to support Engineering in Software

14. The tools of an engineering discipline

- What is software development? Solving problems, and building some checks into our process before we dive into production. These checks are the "tests".

- Testability as a tool: if we are going to test our software, then it makes sense that, to make our lives easier, we should make our software easy to test.

- Designing to improve the testability of our code makes us design higher-quality code.

- Measurement points: if we want our code to be testable, we need to be able to control the variables. A measurement point is a place where we can examine the behavior of our system without compromising its integrity.

Example: calculator.

```java
public class Calculator {

    public int add(int a, int b) {
        return a + b;
    }

    public int subtract(int a, int b) {
        return a - b;
    }

    public int multiply(int a, int b) {
        return a * b;
    }

    public double divide(int a, int b) {
        if (b == 0) {
            throw new IllegalArgumentException("Cannot divide by zero");
        }
        return (double) a / b;
    }
}
```

Let’s break down why this code is easy to test:

- Modularity: Each method in the `Calculator` class performs a specific operation (addition, subtraction, multiplication, and division). This makes it easier to isolate and test individual behaviors.

- Clear Input and Output: Each method takes input parameters and returns a result. This makes it straightforward to provide inputs and verify outputs during testing.

- No External Dependencies: The methods in this class do not rely on external resources or dependencies, such as databases or network connections. This simplifies testing because you don’t need to set up complex environments for testing.

- Error Handling: The `divide` method includes error handling for division by zero. This ensures that the method behaves predictably in all scenarios and allows for testing of edge cases.

Now, let’s write some unit tests using JUnit to verify the behavior of these methods:

```java
import org.junit.Test;
import static org.junit.Assert.*;

public class CalculatorTest {

    @Test
    public void testAdd() {
        Calculator calculator = new Calculator();
        int result = calculator.add(3, 5);
        assertEquals(8, result);
    }

    @Test
    public void testSubtract() {
        Calculator calculator = new Calculator();
        int result = calculator.subtract(10, 4);
        assertEquals(6, result);
    }

    @Test
    public void testMultiply() {
        Calculator calculator = new Calculator();
        int result = calculator.multiply(2, 3);
        assertEquals(6, result);
    }

    @Test
    public void testDivide() {
        Calculator calculator = new Calculator();
        double result = calculator.divide(10, 2);
        assertEquals(5.0, result, 0.0001); // delta is set to account for floating point precision
    }

    @Test(expected = IllegalArgumentException.class)
    public void testDivideByZero() {
        Calculator calculator = new Calculator();
        calculator.divide(10, 0);
    }
}
```

In this test code, taking `divide` as the example, we can identify the measurement points:

- Input: `a` and `b` are the input parameters.

- Output: The quotient of `a` divided by `b` is returned as the output.

- Exception Handling: If `b` is zero, an `IllegalArgumentException` is thrown.

- Problems with achieving testability: technical difficulties, cultural problems, and difficulty at the edges of our system. The most difficult problem always ends up being "people".

- How to improve testability: if the test before you is difficult to write, the design of the code that you are working with is poor and needs to be improved.

- Deployability: CD is built on the idea of working so that our software is always in a releasable state. The other concept here is “releasability”, which implies some feature completeness and utility to users. The difference is that “deployability” means that the software is safe to release into production, even if some features are not yet ready for use and are hidden in some way.

- Controlling the variables: this means that we want the same result every time that we deploy our software.
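
The same idea of controlling the variables applies inside the code. A common source of uncontrolled variation is the system clock; the sketch below passes a `java.time.Clock` in from outside so that a test can pin time to a known instant. The `Greeter` class and its greeting rule are hypothetical, made up for this illustration.

```java
import java.time.Clock;
import java.time.Instant;
import java.time.LocalTime;
import java.time.ZoneOffset;

final class Greeter {
    private final Clock clock; // the variable we want to control

    Greeter(Clock clock) {
        this.clock = clock;
    }

    // Result depends only on the injected clock, not on when the test runs.
    String greeting() {
        int hour = LocalTime.now(clock).getHour();
        return hour < 12 ? "Good morning" : "Good afternoon";
    }
}

class ControlledClockDemo {
    public static void main(String[] args) {
        // In a test: freeze time at a known instant (09:00 UTC).
        Clock nineAm = Clock.fixed(Instant.parse("2024-01-01T09:00:00Z"), ZoneOffset.UTC);
        System.out.println(new Greeter(nineAm).greeting());

        // In production: use the real clock.
        System.out.println(new Greeter(Clock.systemDefaultZone()).greeting());
    }
}
```

With the clock fixed, the first call gives the same answer on every run, which is exactly the repeatability that a measurement point needs.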

15. The modern software Engineer.

- What makes a modern software engineer? Testability, deployability, speed, controlling the variables and continuous delivery.

**Engineering as a human process**. Engineering is the application of an empirical, scientific approach to finding efficient, economic solutions to practical problems.

- Digitally disruptive organizations. Control the variables, keep related ideas close together with cohesion, and keep unrelated ideas apart with modularity, separation of concerns, abstraction, and reductions in coupling.